The Chat GPT Problem No One Is Talking About

Centralizing your knowledge.

Chat GPT.

Everyone's talking about it.

A chat bot that uses a GPT-3.5 language model to generate human-like text.

Ask it anything and it outputs an answer in a couple of seconds.

Like when I asked it for a regex that finds Gmail addresses and excludes Yahoo addresses in a text file. Really cool!
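
For the curious, here is a rough sketch of what such a regex could look like in Python. This is not the exact pattern Chat GPT gave me. The pattern and the `find_gmail_addresses` helper are my own illustration, and they assume that matching only the gmail.com domain is enough to leave Yahoo addresses out.

```python
import re

# Illustrative pattern (an assumption, not Chat GPT's actual output):
# a simple local part, then "@gmail.com", then a lookahead that rejects
# longer domains such as "gmail.com.yahoo.com" or "gmail.community".
GMAIL_RE = re.compile(
    r"[A-Za-z0-9._%+-]+@gmail\.com(?!\.?[A-Za-z0-9-])",
    re.IGNORECASE,
)

def find_gmail_addresses(text):
    """Return every Gmail address found in text, in order of appearance."""
    return GMAIL_RE.findall(text)

sample = (
    "alice@gmail.com, bob@yahoo.com, "
    "Carol.Smith+news@GMAIL.com. dave@gmail.com.yahoo.com"
)
print(find_gmail_addresses(sample))
```

Because the pattern only ever matches the gmail.com domain, Yahoo addresses are skipped without needing a separate exclusion rule. Real-world email syntax is messier than this, so treat it as a starting point.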

It’s a great tool. You can use it to summarize complex subjects in a couple of lines. You can even ask it to generate computer code. It nicely curates content in a digestible way.

And it’s definitely disruptive. Some are happy that it makes their job easier. Others are worried it will replace them.

But in the midst of all the excitement and worry surrounding it, there’s a bigger problem.

The Problem

There’s an issue that many are ignoring, the elephant in the room:

Is centralizing your source of knowledge a good idea?

Putting your knowledge in the hands of corporations like OpenAI is dangerous. Centralizing your source of knowledge in a chat bot isn’t liberating. In fact, it limits your scope of knowledge and beliefs to a single source.

Do you want a bot to decide what you should believe?

Do you want a bot to decide what’s right and wrong for you?

Blindly following Chat GPT or any chat bot slowly strips your critical thinking away.

You could argue that blindly following a bot is stupid, but that’s you. What about the masses that will?

Imagine living in a dystopian world where bots are seen as gods. Knowledge, belief and narrative controlled by a single entity, corporate or government. And I believe this is becoming a reality.

Let’s not forget that abuse in tech is pretty common, as we’ve seen in the last couple of years. Big tech abusing its power for profit.

There’s certainly a possibility of chat bots being used to control the narrative. And that risk shouldn’t be ignored.

Chat GPT Biases

And one way narratives can be manipulated is through biases. As benign as they seem, they shouldn't be ignored or swept away.

Saying Chat GPT’s algorithm is neutral is wrong in the first place, as shown in this article.

Biases can indirectly be manipulated by bad actors through training data.

It all comes down to data transparency. Not knowing how neutral a language model's training data is poses a real problem. Train a bot on left-leaning data, you get a left-leaning bot. Train a bot on right-leaning data, you get a right-leaning bot. And so on and so forth.
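
To make that concrete, here is a toy sketch in Python. It is nowhere near how GPT models are actually trained. It is just a word-pair counter, and the two tiny corpora and the function names (`train_bigram_model`, `most_likely_next`) are made up for illustration. The point is only that the same code, fed different data, ends up with different "opinions".

```python
from collections import Counter, defaultdict

def train_bigram_model(sentences):
    """Count which word follows each word in the training sentences."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts

def most_likely_next(model, word):
    """Return the word seen most often after `word`, or None if unseen."""
    followers = model.get(word.lower())
    if not followers:
        return None
    return followers.most_common(1)[0][0]

# Two made-up training sets that lean in opposite directions.
corpus_a = ["pizza is great", "pizza is great", "pizza is fine"]
corpus_b = ["pizza is awful", "pizza is awful", "pizza is fine"]

model_a = train_bigram_model(corpus_a)
model_b = train_bigram_model(corpus_b)

print(most_likely_next(model_a, "is"))  # leans toward corpus_a's view
print(most_likely_next(model_b, "is"))  # leans toward corpus_b's view
```

Neither model is "lying". Each one simply repeats whatever its training data said most often, which is why knowing what went into the data matters.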

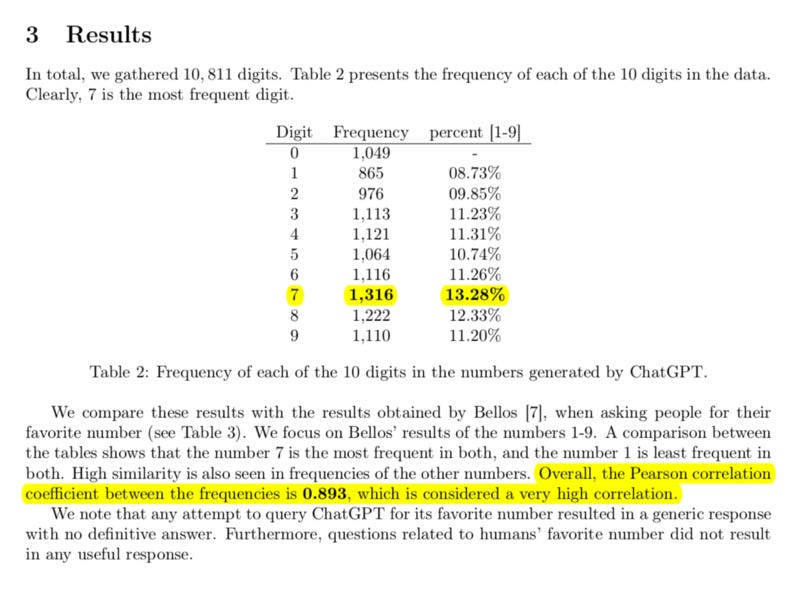

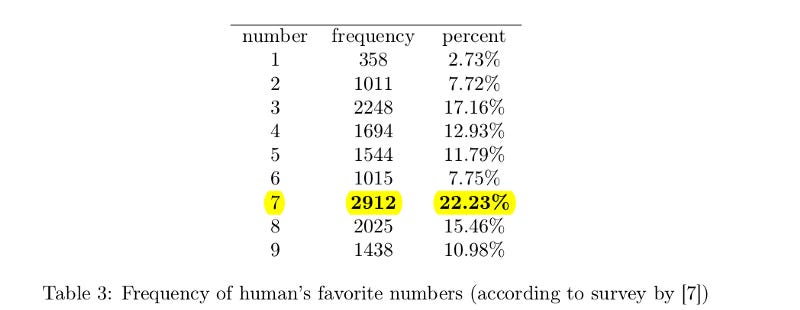

Chat GPT's biases are also demonstrated in this study, in which it was asked for its favorite number 10,811 times. Seven came up as the most common answer, same as for us humans.

Biases can be created by manipulating training data of a language model, intentionally or not.

That just shows how easily biases can creep into ML/AI software. And in the hands of bad actors, they can be exploited in many ways.

And how do we solve that? Regulation, more transparency, who knows.

Have an amazing week!